Experiments in concurrency 4: Multiprocessing and multithreading

Okay, back again. Thus far, we've seen the limitations of a single-threaded architecture in achieving concurrency (Part 1). Then we saw how coroutines (Part 2) and an event loop with non-blocking I/O (Part 3) can help with this. So, next question: what happens when we go beyond the single-threaded model?

There are two other ways we can achieve concurrency if we move outside of a single thread: multithreading (using multiple threads in the same process) and multiprocessing (using multiple processes). Let's experiment!

Concurrency and parallelism

First, a little step back—what really is the difference between these two?

In Part 1, I said:

Concurrency doesn't mean doing multiple tasks in parallel. Rather, it involves a number of strategies that let you smartly switch between those tasks so it looks like you're handling them at the same time. The major benefit of concurrency is to not keep any one person waiting for too long.

Let's explore that for a bit. I came across an awesome analogy some time back that made me understand the difference:

Scenario A: Suppose you go to a restaurant, and there's a long line of customers. Each person tells the attendant their order, the attendant goes to the kitchen to make the meal, then comes back and gives the customer their meal. This means each customer has to wait for all previous customers to be fully served before they can be attended to. When the line is long, it gets frustrating; customers often get tired of waiting and leave.

Scenario B: Now, imagine that the restaurant wants to improve efficiency, so they add a chef that stays in the kitchen. You tell the attendant your order, they pass it to the chef, who makes it. While the chef is making the food, the attendant takes the next customer's order, and so on. When the chef finishes a meal, the attendant hands it to the customer that ordered it and completes their order; meanwhile, the chef starts preparing the next meal. Now things will be a bit less frustrating. You still have to wait for the chef to prepare your meal, but at least you get attended to sooner.

Scenario C: Imagine that instead of adding a chef, the restaurant added a second attendant. You still have to wait for all earlier customers to be fully served before you, but at least there are two lines now, so your wait time should be shorter.

This is a good illustration of concurrency and parallelism. The attendant is our app or webserver handling requests. Scenario A is the exact scenario we faced in Part 1—PHP's single-threaded synchronous nature means each request has to wait for previous ones to be processed fully.

Scenario B is what happens when you throw in concurrency and async I/O. Now, the I/O operation (making the meal) is delegated to the OS, while the main thread keeps handling new requests. When the I/O operation is done, the server sends the response.

In Scenario C, we have parallelism—there are two servers (processes or threads), so customer requests are truly being handled in parallel.

And that's not all. We can combine concurrency and parallelism (add more attendants and more chefs) to get even better results.

(I can't remember where I read this analogy, but it was the one that stuck. If anyone knows the source, please let me know.)

Threads and processes

I don't know enough computer internals to define them precisely,😅 but here's what I've gleaned:

Processes are tasks your operating system runs. When you run node index.js, you start a new process. When you open your browser, you start a new process (which can in turn start other processes).

A thread is also a task, but a smaller unit. Think of threads as components of processes. Every process will have at least one thread.

Explaining threads is more involved, because "thread" can refer to different things. Threads in your programming language might be implemented differently from threads in your operating system. But some things are typically agreed upon:

- Processes are isolated—they don't share memory or resources. If you declare a variable in one process, you can't access it from another process. Threads, on the other hand, can typically share memory.

- Switching between threads is cheaper than switching between processes.
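To make the memory point concrete, here's a minimal sketch. The method and variable names are mine, purely for illustration, and it assumes a Unix-like system (Process.fork isn't available on Windows). A thread's write to a variable is visible to the main thread, while a forked child's write is not:

```ruby
# Returns the counter's value after a thread, and then a forked child,
# each try to modify it. (Illustrative names, not from any library.)
def shared_memory_demo
  counter = 0

  # The thread runs inside the same process, so it mutates the same variable.
  Thread.new { counter += 1 }.join
  after_thread = counter

  # The forked child works on its own copy of the process memory,
  # so its change never reaches the parent.
  pid = Process.fork { counter += 100 }
  Process.wait(pid)
  after_fork = counter

  [after_thread, after_fork]
end

after_thread, after_fork = shared_memory_demo
puts "after thread: #{after_thread}"
puts "after fork: #{after_fork}"
```

Both lines should print 1: the thread's increment sticks, and the child's `+= 100` only ever happens in the child's copy.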

This article goes into more detail about threads and processes.

Alright, setup done. Let's try out some experiments.

Experiment 1: What's the cost of context-switching?

We just mentioned that it's cheaper to switch between threads than processes. Can we test that?

Time to run a simple test. We'll use Ruby, since it has native threads. We'll use Ruby's benchmark module to measure the time it takes to start a new process/thread and return to the main one.

    require 'benchmark'

    b1 = Benchmark.measure do
      Thread.new { puts "in thread" }
      puts "in main"
    end

    b2 = Benchmark.measure do
      spawn("ruby", "-e puts 'in spawned process'")
      puts "in main"
    end

    b3 = Benchmark.measure do
      Process.fork { puts "in forked process" }
      puts "in main"
    end

    puts "switching threads: #{b1}"
    puts "switching processes (spawn): #{b2}"
    puts "switching processes (fork): #{b3}"

Here we're running three tests. Each prints a simple string by either:

- starting a new thread

- starting a new process

- forking this process into a new process

Forking a process is a way of starting a new process by copying the current one, including its memory.

Before we even run this, we can guess that test 2 will be slower than test 1: to start a process, we need to leave Ruby and ask the OS to run a new ruby command. By comparison, to run a thread, we stay in Ruby-land, and just pass a block.

Here's a sample of what I get when I run this on my system:

    in main
    in main
    in main
    in forked process
    in thread
    switching threads: 0.000064 0.000000 0.000064 ( 0.000064)
    switching processes (spawn): 0.000146 0.000000 0.000146 ( 0.000282)
    switching processes (fork): 0.000456 0.000000 0.000456 ( 0.000462)
    in spawned process

(Note: Process.fork() isn't supported on Windows, so I'm running this in WSL.)

The value in brackets is the elapsed real (wall-clock) time in seconds. Let's compare our results:

- Starting a new thread and switching back takes 0.06ms.

- Spawning a new process and switching back takes 0.28ms (about 4x slower).

- Forking the process and switching back takes 0.46ms (about 7x slower than threads).

Note that these results are not definitive. When I run this on Replit, forking is sometimes faster than spawning and sometimes slower, but threads are always much faster. Different factors will affect this behaviour, such as the operating system and how it implements processes and forking, the current load on the system, or even the Ruby version.

Either way, it's clear that starting a thread and switching back is much cheaper than doing the same with a process. Still, the cost is pretty small, even for a process (under 1ms). However, there's also a memory usage cost (which we didn't consider here). If you're creating a lot of threads/processes, these costs will add up.
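Since a single run is noisy, one way to get steadier numbers is to average over many rounds. This is only a sketch under my own assumptions: `average_ms` is a helper I made up, 50 rounds is an arbitrary sample size, and it needs a Unix-like system for Process.fork. Unlike the test above, each round also waits for the thread or child to finish, so it measures the full round trip:

```ruby
require 'benchmark'

# Average wall-clock time in milliseconds for one run of the block,
# taken over `runs` repetitions. (Hypothetical helper for this sketch.)
def average_ms(runs)
  total = Benchmark.realtime { runs.times { yield } }
  total / runs * 1000
end

runs = 50 # arbitrary sample size; tune it for your machine

# Each round starts a thread (or forks a child) and waits for it to finish.
thread_ms = average_ms(runs) { Thread.new { nil }.join }
fork_ms = average_ms(runs) { Process.wait(Process.fork { nil }) }

puts format("thread: %.3fms, fork: %.3fms", thread_ms, fork_ms)
```

The absolute numbers will differ from machine to machine, so treat them as a rough comparison rather than a measurement of context-switching itself.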

Experiment 2: Do they run in parallel?

Do threads and processes actually run in parallel (i.e., at the same time)? Or does the runtime/OS just switch between them quickly enough? This is not an easy question to answer, for several reasons.

For one, switching between tasks is a foundation of computer multitasking. Historically, computers only had one processor, so that was the only way to multitask. Nowadays, most CPUs have multiple physical cores (dual-core = 2 cores, quad-core = 4, etc), and so we can now actually run multiple processes at the same time. And then there are logical cores (hardware threads, as with Intel's Hyper-Threading), which allow a single physical core to run more than one thread of execution at a time.

The operating system's scheduler generally hides these details from us. It decides which core each process runs on and when, so we can't say for sure whether a process is being run in parallel on a different core, or concurrently on the same core.

With threads, it's also complicated. Since threads are implementation-dependent, we can't say for sure whether they run in parallel or not. For instance, in the MRI Ruby interpreter, there's a feature known as the Global Interpreter Lock, officially the Global VM Lock or GVL (good read here), which explicitly makes sure that only one thread executes Ruby code at a time (the lock is released while a thread waits on blocking I/O). In others (JRuby and Rubinius), there's no such thing, so threads (theoretically) execute in parallel.
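Here's a rough way to see the lock's effect for yourself. It's a sketch, not a rigorous benchmark: `time_threads` is a helper name I made up, and the loop sizes are arbitrary. Sleeping threads should overlap, while CPU-bound threads on MRI should take roughly as long as running the work back to back:

```ruby
require 'benchmark'

# Runs `count` threads that each execute the block,
# and returns the elapsed wall-clock seconds. (Hypothetical helper.)
def time_threads(count, &work)
  Benchmark.realtime do
    Array.new(count) { Thread.new(&work) }.each(&:join)
  end
end

# Blocking call: the lock is released while a thread sleeps, so these overlap.
sleeping = time_threads(4) { sleep 0.2 }

# CPU-bound work: on MRI, only one thread runs Ruby code at a time.
busy_one = time_threads(1) { 200_000.times { |i| i * i } }
busy_four = time_threads(4) { 200_000.times { |i| i * i } }

puts format("4 sleeping threads: %.2fs", sleeping)
puts format("1 busy thread: %.3fs, 4 busy threads: %.3fs", busy_one, busy_four)
```

On MRI, I'd expect the four sleeping threads to finish in about the time of one sleep, and the four busy threads to take noticeably longer than one. On JRuby, the busy case may look quite different.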

Still, I tried to do a rough "test" on my laptop. It has 8 cores (which is 16 logical cores), so I should be able to run 16 processes simultaneously. I made a file, test.rb, which would spawn 7 processes (to add to the one already running):

    7.times do |i|
      Process.spawn "ruby", "process.rb", "#{i}"
    end

    sleep 10

    # Just some random work for the CPU
    3876283678 * 562370598644 / 7753984673654 * 67659893

    puts "Done main"

In process.rb, I was just sleeping for 40s, then doing some math:

    sleep 40

    3876283678 * 562370598644 / 7753984673654 * 67659893

    process_number = ARGV[0]
    puts "Done #{process_number}"

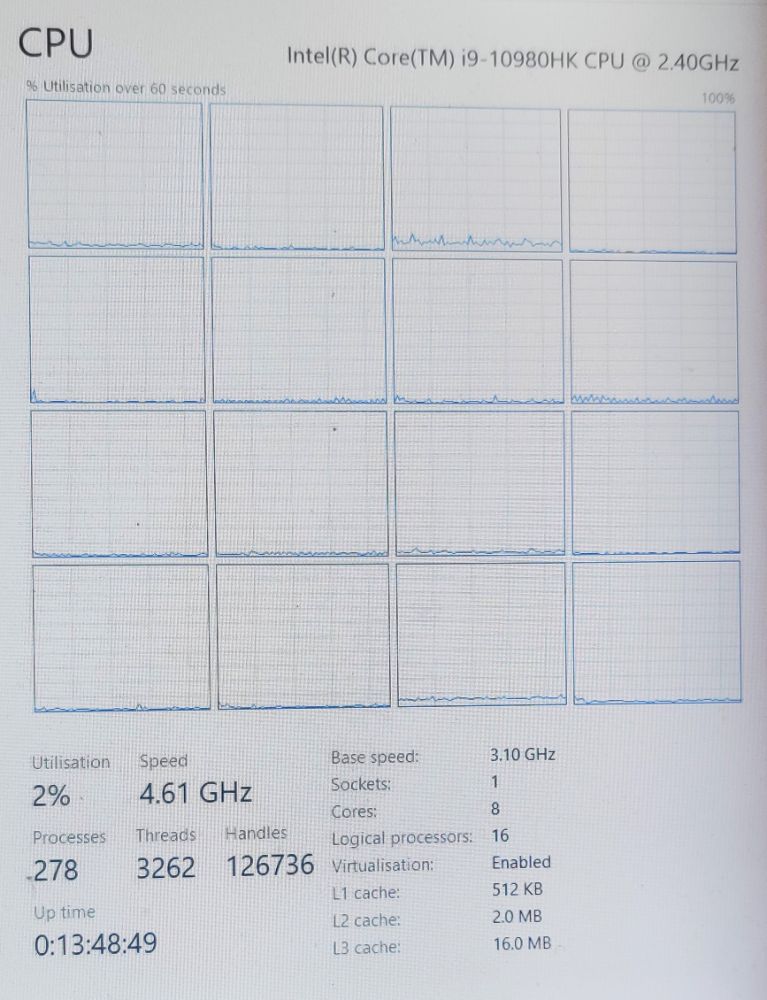

Here's what my CPU usage looked like before I ran the test. There's one graph for each logical processor:

(Forgive the photo quality, I had to take them on my phone to avoid the observer effect.)

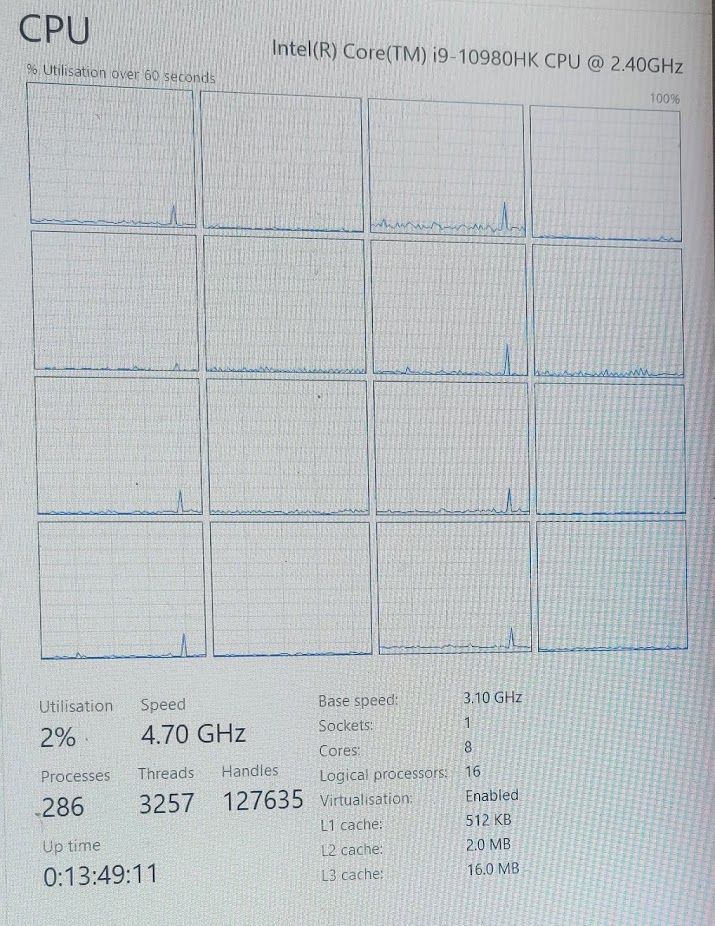

A few seconds later:

You can see eight little spikes on the graphs. This suggests that I'm now running 8 processes simultaneously, one on each core.

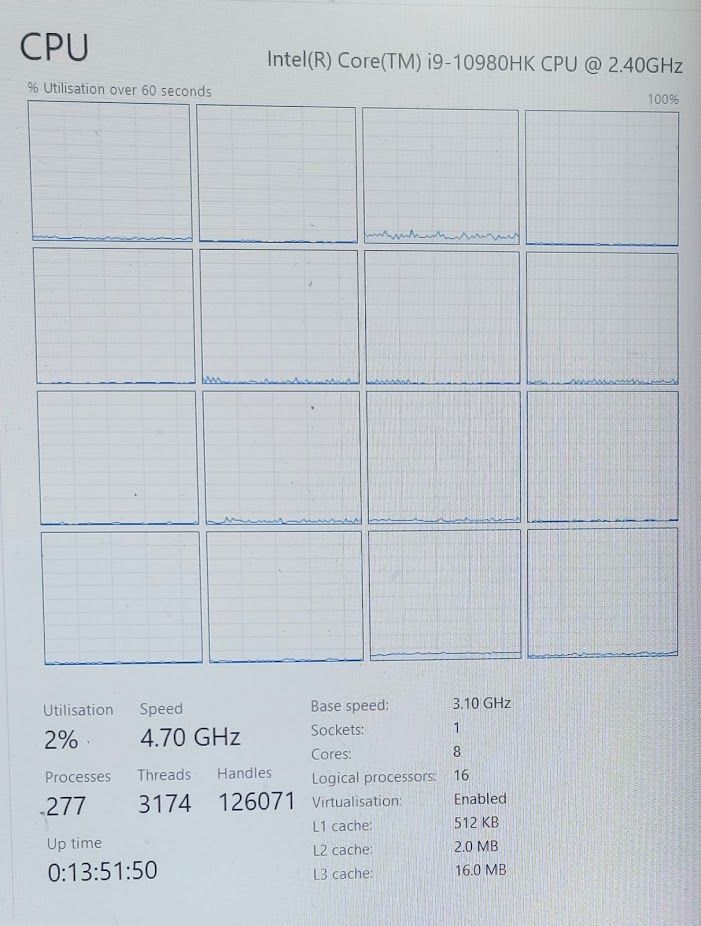

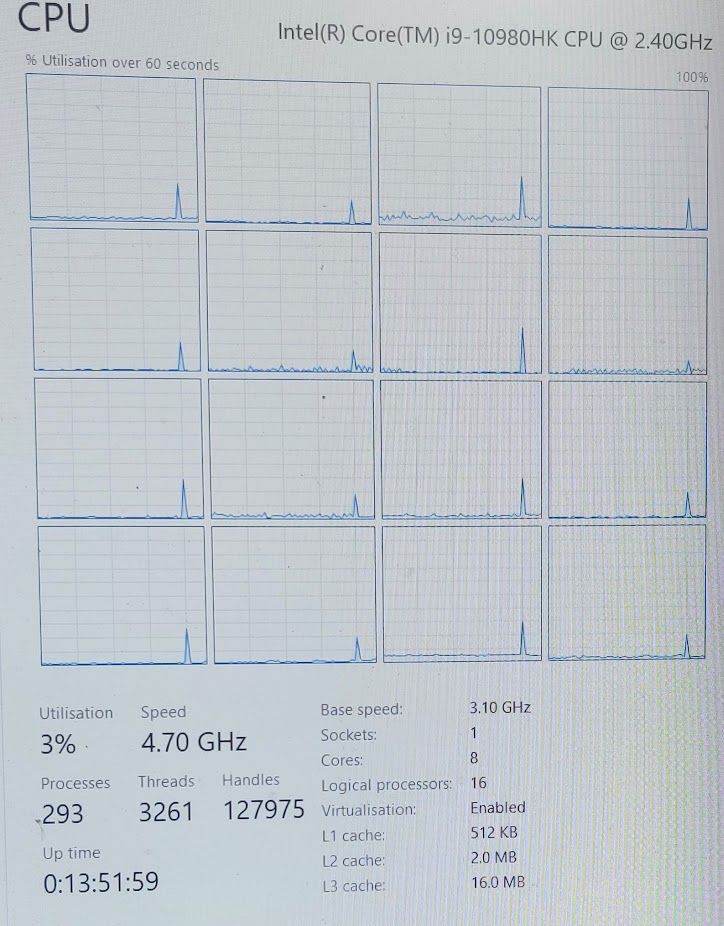

If I try with 15 + 1 processes (before vs after):

You can see that there are now spikes across each logical core, so it looks like the processes are being run in parallel.

By contrast, if I switch the script to use threads instead of processes, there's only a spike on one core.

This is a very unscientific experiment, but it's an interesting look at how the OS schedules tasks across CPU cores. As always, it will vary across platforms, but multiprocessing seems to be the safe bet if you want to use all your CPU cores.
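If you'd rather not hardcode the process count, Ruby's etc library can report the number of logical processors. Here's a sketch that forks one CPU-bound child per logical core and waits for them all. The method names and the loop size are my own choices, and it assumes a Unix-like system for Process.fork:

```ruby
require 'etc'

# Number of logical processors the OS reports.
def worker_count
  Etc.nprocessors
end

# Forks one CPU-bound child per logical core and waits for all of them.
# Returns each child's exit status so the caller can check they finished cleanly.
def run_on_all_cores
  pids = Array.new(worker_count) do
    Process.fork { 500_000.times { |i| i * i } }
  end
  pids.map { |pid| Process.wait2(pid).last }
end

statuses = run_on_all_cores
puts "#{statuses.count(&:success?)} of #{worker_count} workers finished"
```

Whether each child really lands on its own core is still up to the scheduler, so this only gives the OS the opportunity to use every core.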

Experiment 3: How do they improve concurrency?

Let's see how using threads and processes can allow us to handle more requests. Here's a multithreaded version of our webserver from last time:

    require 'socket'

    server = TCPServer.new("127.0.0.1", 5678)
    puts "Listening on localhost:5678"

    loop do
      # Create a new thread for each incoming request
      Thread.new(server.accept) do |socket|
        request = socket.gets.strip
        puts "Started handling req #{request}: #{Time.now}"
        sleep 5
        puts "Responding 5 seconds later: #{Time.now}"

        socket.puts <<~HTTP
          HTTP/1.1 200 OK
          Content-Type: text/html
          Content-Length: 9

          Hii 👋
        HTTP
        socket.close
      end
    end

It's similar with processes:

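    # ...
    loop do
      socket = server.accept
      # Fork a new process for each incoming request
      pid = Process.fork do
        # This block executes only in the child process
        request = socket.gets.strip
        # ...
        socket.close
      end
      # Back in the parent: close our copy of the socket (the child has its own)
      # and detach the child so it doesn't linger as a zombie
      socket.close
      Process.detach(pid)
    end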

Both of them give similar results when I test with autocannon --connections 3 --amount 3 --timeout 10000 --no-progress http://localhost:5678:

    Listening on localhost:5678
    Started handling req GET / HTTP/1.1: 2021-05-19 13:02:25 +0100
    Started handling req GET / HTTP/1.1: 2021-05-19 13:02:25 +0100
    Started handling req GET / HTTP/1.1: 2021-05-19 13:02:25 +0100
    Responding 5 seconds later: 2021-05-19 13:02:30 +0100
    Responding 5 seconds later: 2021-05-19 13:02:30 +0100
    Responding 5 seconds later: 2021-05-19 13:02:30 +0100

Nice! We've got concurrency again, but without having to implement an event loop.

Other platforms

Let's move away from Ruby for a bit. Most programming runtimes allow you to spawn processes as needed, but support for threads is more varied.

PHP doesn't support threads natively. There's the pthreads extension, but that's been discontinued as of PHP 7.4, in favour of the parallel extension. PHP also supports forking processes (except on Windows).

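Node.js doesn't support process forking in the Unix sense (child_process.fork() exists, but despite the name it starts a fresh Node.js process rather than copying the current one), but you can spawn a process normally. It has threads, but the API is somewhat clunky: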

    const { Worker, isMainThread } = require('worker_threads');

    if (isMainThread) {
      console.time("switching threads");
      new Worker(__filename);
      console.timeEnd("switching threads");
      console.log("in main");
    } else {
      console.log("in thread");
    }

Node.js' thread implementation also seems much slower than Ruby's, plausibly because each Worker boots its own V8 instance and event loop. I get around 6ms here, compared to the 0.06ms in Ruby:

    switching threads: 6.239ms
    in main
    in thread

Generally, threads aren't commonly used in Node.js unless you're doing CPU-heavy work. That's because it's usually more efficient to stay on the main event loop: I/O operations are already handled off the main thread, either by the OS's non-blocking I/O facilities or by libuv's internal thread pool. In fact, the docs say:

Workers (threads) are useful for performing CPU-intensive JavaScript operations. They do not help much with I/O-intensive work. The Node.js built-in asynchronous I/O operations are more efficient than Workers can be.

Comparing approaches

So we've seen three ways we can achieve concurrency. Which should you use? My thoughts:

- Multithreading gives you concurrency benefits without you having to change your code to do async I/O (callbacks/promises, etc). Downside: threads share memory, so one thread can change the value of a variable for another thread, leading to unexpected results. So we have to make sure our code is thread-safe, for example, with a mutex. Additionally, depending on your configuration, an error in one thread can crash the whole application.

- An event loop is useful when you want to stay on a single thread (and use less memory), but it means you'll need to switch to async I/O to get the real benefits. You don't have to worry about thread-safety, but you still have shared memory for all requests, so you'll want to avoid modifying global state.

- Multiprocessing gives you parallelism. You can have eight servers running on your 8-core machine, actively handling 8 requests at the same time. The processes are also independent, so one can crash (and restart) without affecting others. The cost here is higher resource usage (CPU and memory). If you need to scale up to handle a lot of concurrent requests, multiprocessing will be a definite boost.
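The mutex mentioned in the multithreading point can be sketched like this. The method name and the numbers are hypothetical. Several threads increment one shared counter, and wrapping the increment in Mutex#synchronize ensures only one thread touches it at a time:

```ruby
# Increments a shared counter from several threads, guarding each
# update with a Mutex so no increments are lost. (Illustrative helper.)
def count_with_threads(threads:, increments:)
  counter = 0
  lock = Mutex.new

  workers = Array.new(threads) do
    Thread.new do
      increments.times do
        # Only one thread at a time may run this block.
        lock.synchronize { counter += 1 }
      end
    end
  end
  workers.each(&:join)

  counter
end

puts count_with_threads(threads: 4, increments: 10_000)
```

With the lock in place, the total is always threads × increments (40000 here). Without it, `counter += 1` is a read followed by a write, so depending on the runtime, two threads could read the same value and one update would be lost.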

Most production webservers use one or more of these approaches. Spinning up a new process or thread for every request can get quite expensive (plus you might hit your operating system's process/thread limit), so they typically use pools — create a set of processes or threads; when a new request comes in, pick one from the pool to handle it.
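To illustrate the pool idea, here's a tiny fixed-size thread pool built on Ruby's thread-safe Queue. It's a sketch only: `TinyPool` and its methods are names I made up, and real servers add things like error handling, timeouts and backpressure. Workers are created once up front, and each one keeps pulling jobs off the queue:

```ruby
# A minimal fixed-size thread pool. (Hypothetical class for illustration.)
class TinyPool
  def initialize(size)
    @jobs = Queue.new
    @workers = Array.new(size) do
      Thread.new do
        # Queue#pop blocks until a job arrives; :stop tells the worker to quit.
        while (job = @jobs.pop) != :stop
          job.call
        end
      end
    end
  end

  # Queue a block to be run by whichever worker is free next.
  def submit(&job)
    @jobs << job
  end

  # Ask every worker to stop once the queued jobs are done, then wait for them.
  def shutdown
    @workers.size.times { @jobs << :stop }
    @workers.each(&:join)
  end
end

results = Queue.new
pool = TinyPool.new(3)
10.times { |i| pool.submit { results << i * 2 } }
pool.shutdown
puts "completed #{results.size} jobs with 3 threads"
```

Here ten jobs are handled by just three threads, so the cost of creating a thread is paid three times rather than once per job. A process pool follows the same shape, with the jobs handed to long-lived worker processes instead.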

In the Ruby world, there are several popular servers:

- Ruby's default server, WEBrick, can run as either single-threaded (with no event loop) or multi-threaded

- Goliath uses a single-threaded event loop

- Thin can be either single- or multi-threaded

- Falcon is multi-process, multi-threaded and event-looped with async I/O

- Unicorn uses process forking

- Puma is multi-threaded, but can also run as multiple processes, with each process having its own thread pool

In Node.js, as we saw, we typically use the built-in server, which is single-threaded with an event loop. However, Node.js also supports multiprocessing servers via cluster.fork() (which, despite its name, starts a fresh process rather than forking the current one). You start as many processes as you wish, and incoming HTTP requests are distributed amongst them. This is what process managers like PM2 use internally.

In PHP, you can use either an event-looped server like ReactPHP or a process manager like PHP-FPM. There's also PHP-PM, a process manager built on ReactPHP (giving you multiprocess + event loop).

Finally, in addition to these, you might also use a server like Nginx. Nginx uses multiple worker processes, which run an event loop and can use threads too. Nginx is a robust server with additional features like load-balancing and reverse proxying, so it's used with a lot of applications.

Thanks for following this series! If you need more reading, the guys at AppSignal have a really good series where they build a concurrent chat app using each of these three approaches.
