While writing my custom serialization format, I spent a while working with byte streams, strings and encodings, so I figured I'd do an article on that.

We deal with strings everywhere in code, but at a lower level, there really aren't any "strings". What we have is a sequence of bytes, just like all other data. The key differences:

- we decide that this sequence of bytes should be interpreted as text for reading

- we decide on a mapping for what bytes mean what characters

In fact, this applies to all data types, not just strings. For instance, a floating-point number such as 0.3 is a sequence of bytes; we just use a different standard to decide how we should store and interpret it. But back to strings.

Character sets

Let's start with the string "hello world". Pretend you're an early computer scientist, and you need to figure out a way to store this string in a computer's memory. Computers store data in bytes, which hold the numbers 0 and 1. How do you represent a string as numbers?

We can't assign every string in the world a specific number and store that, because there's an infinite number of strings that can exist. But the number of characters that make up strings is much smaller, and limited in size. We can assign a number (a code) to every character; for every string, we store the codes for the characters in the string, and then to read the string, we read the codes and look up the character it corresponds to.

This means we have to define a character set, a mapping that assigns a numeric code to every recognized character. It's the dictionary we consult to determine how to convert characters to numbers, and vice versa. The most popular character set, ASCII, maps 128 different characters to a number from 0 to 127. In ASCII, the letter h has a code of 104 (68 in hexadecimal), e is 101 (65 in hex), and so on. The full list is here, but in summary:

- codes 0-31 are non-printable characters like TAB (what you get when you hit the TAB key)

- codes 48-57 are the numbers 0-9

- codes 65-90 are the capital letters A-Z

- codes 97-122 are the small letters a-z

- all other codes are symbols like #, &, !

But you've probably already spotted a problem: in ASCII, character codes range from 0 to 127, which means only 128 possible characters can be represented. That mostly covers the English language, but many languages use other characters (like 大). And there are also mathematical symbols (±), and (today) emoji.

Aside: Why does ASCII support only 128 characters? The answer is an intersection of old tech (the telegraph) and new (the computer). ASCII was based on the existing telegraphing system, and at the time, they figured they only needed 7 bits, which can only hold 128 (2⁷) numbers. Also, it's the "American Standard Code for Information Interchange", so it makes sense that they focused on English characters. There are other character sets designed for other languages. But we'll focus on ASCII and friends because they started with English and the Latin/Western alphabet.

This is why we couldn't stop at ASCII. We got more character sets, like ISO-8859-1 and Unicode. ISO-8859-1 (also called "Latin1") is an "extended ASCII" charset; it adds a few non-English characters like ß to the basic ASCII set, bringing it up to about 200 characters (and 8 bits).

Unicode is the "modern" character set, supporting over 1 million possible codepoints, and is the reason why your computer or phone can render emoji and characters from other languages correctly. Unicode is maintained by the Unicode Consortium, and its character mapping system is more complex than ASCII's. It has tons of codepoints still available to be assigned to new characters.

Unicode encodings

We have a new challenge, though. One byte = 8 bits, which means a byte can only hold 256 (2⁸) characters. For an encoding like Unicode to support more than 256, it has to go beyond one-byte characters. And it does! A single Unicode codepoint can be up to four bytes. But we don't always need 4 bytes; a simple 5-character string like "hello" would take 20 bytes! There has to be some way we can use only the minimum number of bytes each character needs. The good news: there is. This is called a variable-width encoding, and there are two main ones in Unicode.

(By the way, the superscript I used above (⁸) is a Unicode character that uses 2 bytes (hex code: 2078.)

Aside: Hex codes.

We'll often refer to the hexadecimal (base-16, aka "hex") codes of characters. There's nothing special aboout hex; it's just common because it's very convenient, since it's a power of 2, and it helps us write bytes in a shorter way. For instance, the byte 11011001 is D9 in hex.

But first: an encoding is a way to represent a string. It's separate from the character set, which is just the mapping from character to number (although the terms are often used interchangeably). An encoding allows us to write a codepoint in a number of different ways, giving us some flexibility to fit our needs. There are three main Unicode encodings: UTF-32, UTF-16, and UTF-8. "UTF" means "Unicode Transformation Format", and the number paired with it represents how many bits are needed at minimum.

UTF-32 uses 32 bits (4 bytes) to represent every codepoint. It is a fixed-width encoding—whether or not the character needs 32 bits, it's going to occupy 32 bits. The unused bits would be zeros. Using UTF-32 is often a waste of space since most languages' characters fit into the first two Unicode bytes (the "Basic Multilingual Plane").

UTF-16 and UTF-8 are variable-width. They use a minimum of 16 bits (2 bytes) and 8 bits (1 byte) respectively to represent a codepoint. To represent codepoints larger than their range, they use special combination tactics, which we'll see in a moment. For example:

- The letter "h" has a codepoint of

68(hex). In the different encodings it looks like this:

UTF-8: 68 (1 byte)

UTF-16: 00 68 (2 bytes)

UTF-32: 00 00 00 68 (4 bytes)

- The emoji "😊" has a codepoint of

1F60A. In the different encodings it looks like this:

UTF-8: F0 9F 98 8A (4 bytes)

UTF-16: D8 3D DE 0A (4 bytes)

UTF-32: 00 01 F6 0A (4 bytes)

So UTF-8 and 16 default to 1 and 2 bytes, but can add more bytes if necessary. To keep things unambiguous, there are rules for combining code units and only certain units may be combined.

Aside: Character vs codepoint. I've mostly switched terms from "character" to "codepoint". There's a good reason: "character" can be ambiguous—is it the single symbol you see on your screen, or the numeric code representing that symbol? Also, some "characters" are not visible (like TAB), and some are made by combining multiple codes. "Codepoint" is clearer: a single numeric code (which may or may not be a character). Going forward, "character" will refer to what you see on screen, while "codepoint" is the number stored in the computer.

Representing multi-byte characters

Let's take a closer look at how UTF-8 and UTF-16 represent characters outside their range:

UTF-16 represents large codepoints by combining "smaller" ones via surrogate pairs. In the Unicode character set, certain ranges of codes are reserved for surrogates. By placing two complementary surrogates next to each other, we form a pair. The resulting codepoint is then calculated using a defined formula. For example, the emoji "😊" is the single codepoint 1 F6 0A in UTF-32. But UTF-16's code units are 2 bytes, and 1F60A is more than 2 bytes. So we use the surrogates D8 3D and DE 0A, which are two bytes each.

When reading this string, an application does something like this:

- checks the specified encoding. Sees that it's UTF-16, so it must treat every two bytes as one unit

- reads the first two bytes,

D8and3Das one unit,D83D(55357 in decimal) - checks for that character on the Unicode map and realises it's a surrogate

- reads the next two bytes as another unit,

DE0A(56842 in decimal). Checks that that is a valid matching surrogate (it is). - calculates the combined codepoint with the formula (in decimal)

65,536 + ((s1 - 55,296) * 1,024) + (s2 - 56,320), which is65,536 + ((55357 - 55,296) * 1,024) + (56842 - 56,320)= 128522 (or 1F60A in hex) - looks up the codepoint in its Unicode tables to get the symbol it should print, 😊(Unicode details here)

Pretty smart!

UTF-8's approach is a bit more complex. UTF-8 doesn't combine existing codepoints, but instead splits up the "big" codepoint and makes some changes to its bits. Wikipedia has an example describing this encoding process, but let's look at the decoding. Given the string "😊" in UTF-8 (F0 9F 98 8A). a piece of software would:

- check the specified encoding. Sees that it's UTF-8, so it must treat every byte as one unit

- read the first byte.

F0(240 in decimal) is greater than 127, so it knows we're dealing with a multi-byte character. - look at the bits in that first byte. The number of 1s at the start tells us how many bytes make up this character. Two 1s (

11) is a two-byte character, three 1s three bytes, and four 1s four bytes.F0in binary is11110000, so we're dealing with a four-byte value. We then discard those initial 1s, and keep the rest of the byte. - read the next three bytes (since we've already read one out of the expected four), and look at their bits once again. Since they are all parts of one character, they should each start with

10if the string was encoded correctly. Let's check:9F=1001111198=100110008A=10001010All correct!

- discard the initial

10s from each of those 3 bytes, and combine everything (along with the rest of the first byte), ie:0000 (byte 1, minus the initial 1111) 011111 (byte 2, minus the initial 10) 011000 (byte 3, minus the initial 10) 001010 (byte 4, minus the initial 10) - combine these bits into a single value,

0000011111011000001010, which is...128522 in decimal, or1F60Ain hex. We've got our original codepoint back!🎉 - finally, look up the symbol for that codepoint (😊) and draw it on the screen.

Grapheme clusters

But there's more. We can combine existing characters, not just surrogates, in certain ways to get new ones. A good use case is emoji. Emoji keep expanding, and many of them have similar patterns. For instance, we have the same emoji in different skin tones and genders. So rather than assigning a new codepoint to every possible variant of each emoji, some of these variants aren't codepoints. Instead, they are groups of codepoints interpreted as a single character (we call this a grapheme cluster).

To clarify, the difference between grapheme clusters and surrogate pairs is that you put the two surrogates' codepoints in a formula to get the single codepoint they refer to, whereas grapheme clusters don't refer to any single codepoint. Also, surrogate pairs are a UTF-16 thing, but grapheme clusters apply to all Unicode encodings.

The trick to making grapheme clusters is modifier characters and joiner characters. A modifier character might not be visible by itself, but when put next to another character, changes that character's look, while a joiner joins characters together. For example:

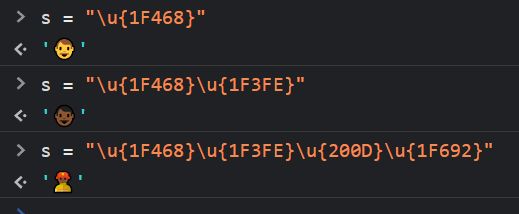

This is the "medium-dark" fireman emoji. It's a grapheme cluster, made by combining:

- the "man" emoji, 👨 (U+1F468)

- a skin tone modifier (U+1F3FE)

- the zero-width joiner (U+200D)

- the "fire engine" emoji, 🚒 (U+1F692)

The skin tone modifier makes the man's skin "medium-dark", while the zero-width joiner (aka ZWJ) combines the man with the fire engine to get the... ̶m̶a̶n̶f̶i̶r̶e̶e̶n̶g̶i̶n̶e̶ fireman. You can even see the stages of the composition yourself:

Sweet. Oh, and by the way, the strikethrough text above is only possible because of Unicode.

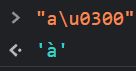

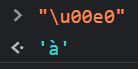

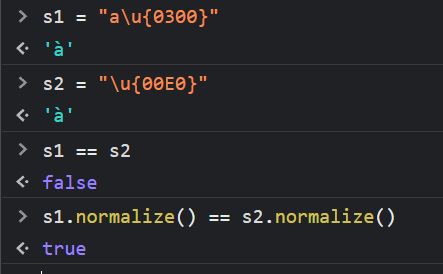

Similarly, you can get à (a with a grave accent above), by combining "a" with U+0300, a modifier character.

But this case is a bit moot because à also has its own codepoint (U+00E0), so you could just type that instead:

An interesting side effect of this is that if you have the first à, and you hit Backspace once, it will delete the accent, leaving you with just "a". For the second, single-codepoint à, hitting Backspace will delete the whole character. An unfortunate side effect is that even though the two accented a's look exactly the same, they're different codepoints, so they will return false for ==. We'll see more about this later.

Some other interesting grapheme combinations are country flag emojis: most of them are made by combining the emoji for the two-letter country code. For instance, to get Nigeria's flag, you'd combine the emoji for 🇳 (U+1F1F3) and 🇬 (U+1F1EC) to get 🇳🇬. You don't even need a joiner! Note that your operating system/font vendor chooses how to display an emoji, so on some sites and devices, you'll see the flag, but on Microsoft devices, you'll see the two letters. (See for yourself by visiting this page from your phone and a Windows PC.)

One final thing: the characters that make up a grapheme cluster can themselves contain surrogate pairs (in UTF-16). In fact, in the fireman emoji example, each of the codepoints (except the joiner) is represented by surrogate pairs because they are all greater than 2 bytes. So, in fact, in UTF-16, the fireman emoji is represented by 7 codepoints in total.

What encoding should I use?

The answer: probably UTF-8. It's the most widely used encoding, used by 98% of websites. In fact, if you're a web dev, you've probably written some HTML that starts with <meta charset="utf-8">, or seen a header like this Content-Type: text/html; charset=utf-8. And UTF-8 is compatible with ASCII: if a file contains only ASCII text and you read it as UTF-8 instead, you'll get the same thing.

Of course, this is for when writing text. When reading text, you should find out the encoding of the text and use that. It's probably UTF-8, especially if it's from the web, but you should check.

However, some software still use other encodings, for reasons ranging from history to security. We'll see them in a bit, but let's talk about encodings in different programming languages. All strings in a language have their own encoding (different from the one you specify when trying to read or write external text). This is important because string operations like checking the length of a string and iterating through characters in a string can give different results.

Let's do a quick comparison across languages, with a string made up of our earlier examples: "h😊👨🏾🚒 à". We'll check the length of this string, and try to split it into characters. To avoid language inconsistencies, we'll use the Unicode codepoint specifier form ("h\u{1F60A}\u{1F468}\u{1F3FE}\u{200D}\u{1F692}a\u{0300}"), rather than writing the raw characters.

JavaScript

In JavaScript, strings are UTF-16.

- Checking the length:

s = "h\u{1F60A}\u{1F468}\u{1F3FE}\u{200D}\u{1F692}a\u{0300}"

s.length // => 12

Even though, the user sees 4 characters, .length reports 12. That's because it counts individual codepoints (and it doesn't combine surrogates). "h" is one codepoint, "😊" is a surrogate pair, "👨🏾🚒" is a grapheme cluster of five codepoints, and "à" is a grapheme cluster of two codepoints.

- Splitting by codepoints:

s.split("")

// => 12 items ['h', '\uD83D', '\uDE0A', '\uD83D', '\uDC68', '\uD83C', '\uDFFE', '', '\uD83D', '\uDE92', 'a', '̀']

This works the same as with .length. You typically don't want to use this method, because it splits up the surrogate pairs and you can't use them for anything. A surrogate by itself is invalid.

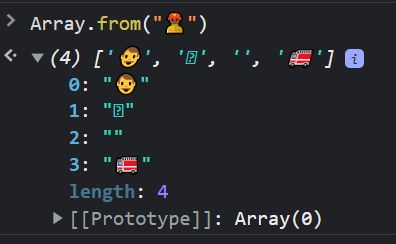

- Splitting by codepoints, but combining surrogates:

Array.from(s) // or [...s]

// => 8 items ['h', '😊', '👨', '🏾', '', '🚒', 'a', '̀']

This is better, because it keeps the surrogates together. Unfortunately, it splits up the grapheme clusters.

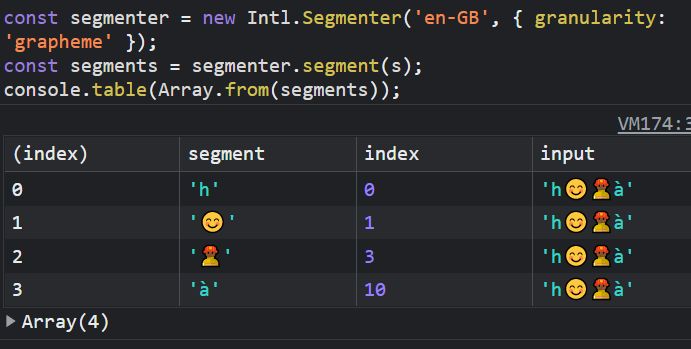

- Splitting by grapheme clusters (or the characters the user actually sees): JS doesn't support this natively, so you'll need a library like grapheme-splitter. There's a Stage-4 proposal in the works, though:

Intl.Segmenter:

One more thing: JavaScript's default string encoding is UTF-16, but when working with text from the outside world (files, networks, etc), JS often defaults to UTF-8. For example, most of the Buffer methods assume "utf8" as the encoding, and the Encoding APIs default to UTF-8.

PHP

PHP's default encoding is UTF-8 (you can change it with an INI setting).

Checking the length:

$s = "h\u{1F60A}\u{1F468}\u{1F3FE}\u{200D}\u{1F692}a\u{0300}";

strlen($s); // => 23

Interesting! JS had 12 "characters", but PHP has 23. This is because PHP's strlen returns the number of UTF-8 bytes, while JS' .length reports UTF-16 code units (each of which is 2 bytes). In UTF-8:

- h: 1 byte

- 😊: 4 bytes

- 👨🏾🚒: 15 bytes (4 for man, 4 for skin tone, 3 for joiner, 4 for engine)

- à: 3 bytes (1 for a, 2 for the accent)

There's good news, though. PHP has specialized multibyte functions:

mb_strlen($s); // => 8

print_r(mb_str_split($s);

// Array

// (

// [0] => h

// [1] => 😊

// [2] => 👨

// [3] => 🏾

// [4] =>

// [5] => 🚒

// [6] => a

// [7] => ̀

// )

Essentially, the mb_* functions keep the multibyte emojis, but break up the grapheme clusters.

If we want to keep the grapheme clusters, PHP's inbuilt intl extension has what we need:

grapheme_strlen($s); // => 4

$next = 0;

$graphemeClusters = [];

while ($next < strlen($s)) {

$char = grapheme_extract($s, 1, GRAPHEME_EXTR_COUNT, $next, $next);

if (empty($char)) continue;

$graphemeClusters[] = $char;

}

print_r($graphemeClusters);

// Array

// (

// [0] => h

// [1] => 😊

// [2] => 👨🏾🚒

// [3] => à

// )

Ruby

Ruby's default is also UTF-8, but it can be changed per string. Every string has an encoding property (we saw it in my Ruby serialization example!). Ruby also has a number of utilities that make it easier to convert between different encodings and check whether what you have is valid or not.

Let's play with our string:

s = "h\u{1F60A}\u{1F468}\u{1F3FE}\u{200D}\u{1F692}a\u{0300}"

s.encoding # => #<Encoding:UTF-8>

s.size # => 8

s.split("") # or s.chars

# => ["h", "😊", "👨", "🏾", "", "🚒", "a", "̀"]

So .size and .split report multibyte characters, but break up grapheme clusters. But because Ruby is Ruby, there's no need to worry:

s.grapheme_clusters

# => ["h", "😊", "👨🏾🚒", "à"]

Why is the encoding so important?

By now, you can see that the encoding of any text is extremely important. Encodings (and character sets) are used everywhere—databases, HTTP, all over your computer. Anywhere you need to deal with text, you need to know what encoding it was written in, otherwise you'll interpret the bytes wrongly and get a garbled mess.

Fun fact: SMS uses its own encoding, GSM-7. It's like ASCII, but not really, and can lead to odd occurences. For instance, SMS normally supports 160 characters per page, but if you add an emoji to your SMS, it changes to 70! Explainer from Twilio.

But just to be practical, let's see a few examples of how using the wrong encoding or not accounting for the encoding can lead to problems.

Validating string length

We've already seen how checking the length of a string can give misleading results. But this is an operation we do quite often. For instance, if you're building an app where users can have public display names, you'll want a maximum length. If you accept Unicode text (which you probably should), you'll want to validate this length.There are different possible "lengths":

- the number of grapheme clusters (human-visible characters)

- the number of codepoints

- the number of bytes

Also, note that your database has an encoding as well, so the number of codepoints and bytes will vary according to your database's encoding.

The option you pick will depend on your goal: if you need a limit for readability, you could go with the number of grapheme clusters. But if you're trying to control storage, you should probably go with codepoints or bytes, since a single grapheme cluster can contain several dozen codepoints (this article has a 65-character one!).

Similarly, when applying string transformations like uppercasing, reversing, or extracting one or more characters, you should be cognizant of these details. Use utilities provided by your platform, and test with different Unicode strings to be sure it works as you expect.

Ensuring uniqueness (or comparing strings)

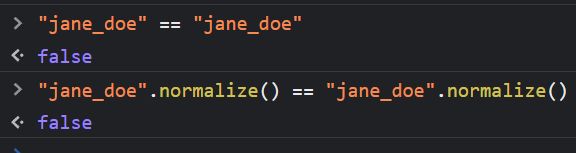

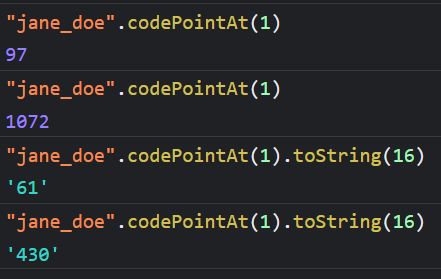

In a Unicode world, simply checking if a string exists in the database or in a list isn't enough. Remember our accented a example? There are two ways of getting that character, and both use different codepoints (and therefore, different bytes). Any regular equality checks will be untrustworthy, leading you to a situation where two users somehow have the same username. The same thing applies when checking if a string contains a certain character.

To get around this, you should normalize the strings before comparing. For example, with JavaScript's string .normalize() method:

And even that's not enough. As this detailed article on usernames points out, some things can't even be fixed by normalization:

This example happens because the second character in each string is different. The first string uses U+0061, our regular letter a, while the second uses the Cyrillic letter а (U+0430). Aargh. Fuckin' Unicode.🤦♀️

This brings us to concerns about...

Security

Our example above is an instance of homographs—strings which look alike but aren't. This is actually a very common occurrence, especially as a phishing tactic. A hacker could send you to yourbank.com, but replace some characters with similar-looking Unicode ones, pointing to a domain they own.

For this reason, domain names are required to be ASCII, and any non-ASCII domains are converted via punycode. For instance, the evil yourbаnk.com is converted to xn--yourbnk-6fg.com in Punycode. (Try clicking on the evil link and check what's in your browser's address bar.)

You can use libraries like punycode.js to convert strings to punycode before checking for equality. And don't forget to normalize as well!

Search

An extension of the above concerns is that if your app supports Unicode and has some kind of text search, you should pay attention to how your search works, especially if you're likely to have non-English users. For instance, Google Docs will include "Männer" in the results when you search for "manner". This is done to make it easier for folks whose keyboards don't have the needed special characters.

One way to implement this is by using a list of homoglyphs (example). When a user searches for "a", you rewrite the search to a OR ä OR á OR ą, and so on. But as this StackOverflow answer points out, that may not even be enough, depending on what you wish to achieve.

You should test that your search system works as you expect. Databases like MySQL have collations that can handle some normalizations for you, but sometimes they can have surprising results.

Building for non-English usage

If you're building an app where you plan to display non-English text, it goes without saying that all of this applies to you. There are several languages that use glyphs outside our Latin alphabet, so keep that in mind. Something as simple as splitting by characters can totally garble a piece of text in a different language (like this guy found out for Tamil).

Further reading

We've really only scratched the surface. There are many more intricacies that go into rendering, encoding and decoding text in the modern world. Some more useful reads:

The .NET docs have a really deep dive on character encodings (.NET focused, but a lot of useful general info).

A compilation of some interesting Unicode codepoints

How the encoding of a file is detected on the web.

I write about my software engineering thoughts and experiments. Want to follow me? I don't have a newsletter; instead, I built Tentacle: tntcl.app/blog.shalvah.me.